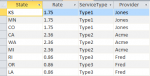

I have a table with a field that holds multiple comma-separated values (for example, KS, MN, MO). I wrote a VBA function that splits the values and inserts each one as a separate record in a new table. I call the function from a query in MS Access that reads the records in the source table. This worked perfectly when I tested it on a handful of records. However, with a large dataset it only processes the records that appear on screen when I open the query in Datasheet view. As I scroll down through the records in the query, it keeps loading data into the destination table (via VBA) as each record appears on the screen.

I’m fairly new to Access. Is there a way to force the query to process all of the records, or a different way to call the VBA function? I’d like to put the query in a macro and have it run when a button is clicked.

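A select query that calls a function for its side effects typically runs that function only for the rows Access actually fetches for display, which matches the behaviour described above. One common alternative is to skip the query and loop over the source table in a VBA procedure with a DAO recordset, so every row is processed regardless of what the datasheet shows. The sketch below assumes hypothetical names (`tblSource` with fields `ID` and `States`, destination `tblStates` with fields `SourceID` and `State`, and the function name `SplitStatesToRows`); substitute your own. It also assumes the DAO object library is referenced, which new Access databases normally include by default.

```vba
' Minimal sketch: split a comma-separated field into one row per value.
' Hypothetical names: tblSource(ID, States) -> tblStates(SourceID, State).
' Assumes a reference to the DAO object library (the default in new Access databases).
Public Function SplitStatesToRows() As Long
    Dim db As DAO.Database
    Dim rsSrc As DAO.Recordset
    Dim rsDst As DAO.Recordset
    Dim parts() As String
    Dim i As Long
    Dim inserted As Long

    Set db = CurrentDb
    ' Read every source row that has a value; a snapshot is enough because it is only read.
    Set rsSrc = db.OpenRecordset( _
        "SELECT ID, States FROM tblSource WHERE States Is Not Null", dbOpenSnapshot)
    ' Open the destination table for appending only.
    Set rsDst = db.OpenRecordset("tblStates", dbOpenDynaset, dbAppendOnly)

    Do While Not rsSrc.EOF
        ' "KS, MN, MO" becomes "KS", " MN", " MO"
        parts = Split(rsSrc!States, ",")
        For i = LBound(parts) To UBound(parts)
            ' Trim the space after each comma and skip empty entries such as "KS,,MN".
            If Len(Trim$(parts(i))) > 0 Then
                rsDst.AddNew
                rsDst!SourceID = rsSrc!ID
                rsDst!State = Trim$(parts(i))
                rsDst.Update
                inserted = inserted + 1
            End If
        Next i
        rsSrc.MoveNext
    Loop

    rsDst.Close
    rsSrc.Close
    Set rsDst = Nothing
    Set rsSrc = Nothing
    Set db = Nothing

    ' Return the number of rows appended to tblStates.
    SplitStatesToRows = inserted
End Function
```

Put this in a standard module. It is written as a `Function` rather than a `Sub` because the macro RunCode action can only call Function procedures, so a button's On Click macro could use RunCode with `SplitStatesToRows()`. Alternatively, the button's Click event procedure in the form module can call `SplitStatesToRows` directly. Running it twice appends the same rows again, so you may want to clear `tblStates` first.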