isladogs

Access MVP / VIP

Over the years, I have used various functions to measure time intervals: Timer, GetSystemTime, GetTickCount.

Each of these can give times to millisecond precision though I normally round to 2 d.p. (centiseconds).

This is because each function is based on the system clock, which is normally updated 64 times per second (approximately every 0.0156 seconds).
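To make that arithmetic concrete, here is a minimal sketch of what a 64 Hz clock update implies for a reading. It is written in Python rather than VBA purely to show the numbers; the names `TICKS_PER_SECOND` and `quantise` are made up for illustration, and it assumes the interval happens to start exactly on a clock tick.

```python
# Illustrative model only: a clock that advances 64 times per second.
TICKS_PER_SECOND = 64
TICK = 1 / TICKS_PER_SECOND  # 0.015625 s, i.e. about 15.6 ms


def quantise(true_seconds: float) -> float:
    """Return the reading such a clock would give for a true elapsed time."""
    whole_ticks = int(true_seconds / TICK)  # only completed ticks are visible
    return whole_ticks * TICK


if __name__ == "__main__":
    print(f"tick length: {TICK} s")
    for t in (0.010, 0.020, 1.575):
        print(f"true {t:.3f} s -> reads {quantise(t):.6f} s")
```

Under this simple model, digits beyond the second decimal place say little about the true interval, which is why rounding to centiseconds loses nothing useful.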

When I started my series of speed comparison tests, I initially used the GetSystemTime function.

However, some occasional inconsistencies led me to revert to the very simple Timer function.

Recently I was alerted to the timeGetTime API by UA member ADezii, with comments taken from the Access 2000 Developers Handbook pg 1135-1136 (quoted in full further down this post).

Part of that comment is no longer accurate, in that the Timer function can measure to milliseconds.

However, as I had never used the timeGetTime API, I decided to compare the results from each of the methods in two simple tests:

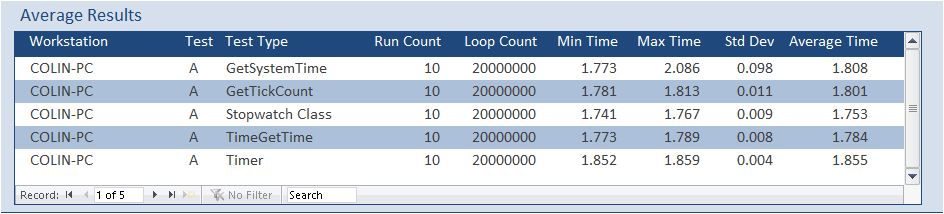

• Looping through a simple square root calculation repeatedly (20,000,000 times)

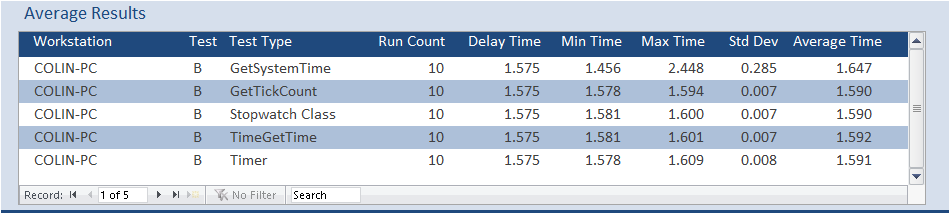

• Measuring the time interval after a specified time delay set up using the Sleep API (1.575 s)

I also added a Stopwatch class to the timer comparison tests (again thanks to ADezii for this code).

Obviously, as with any timer tests, other factors such as background Windows processes and overall CPU load will lead to some natural variation. To minimise the effects of those, I avoided running any other applications at the same time and ran each test 10 times. Furthermore, the test order was randomised each time ... just in case. The average times were calculated along with the minimum/maximum times and standard deviation for each test.
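The procedure just described (repeat each test 10 times, shuffle the order on every pass, then summarise) can be sketched roughly as below. This is not the code from my utility: it is a Python outline of the method only, the two Python clocks are stand-ins for the VBA/API timers, the loop count is cut right down, and the use of the sample standard deviation is an assumption.

```python
import math
import random
import statistics
import time


def sqrt_loop(n: int = 200_000) -> None:
    """Stand-in workload: repeated square roots (far fewer than 20,000,000)."""
    for i in range(n):
        math.sqrt(i)


def time_once(clock, workload) -> float:
    """Elapsed seconds for one run of workload, measured with clock."""
    start = clock()
    workload()
    return clock() - start


def summarise(samples):
    """Average, minimum, maximum and sample standard deviation."""
    return {
        "avg": statistics.mean(samples),
        "min": min(samples),
        "max": max(samples),
        "stdev": statistics.stdev(samples),
    }


def run_tests(clocks, workload, repeats: int = 10):
    """Time the workload with every clock `repeats` times, in shuffled order."""
    results = {name: [] for name in clocks}
    names = list(clocks)
    for _ in range(repeats):
        random.shuffle(names)  # randomised test order on each pass
        for name in names:
            results[name].append(time_once(clocks[name], workload))
    return {name: summarise(times) for name, times in results.items()}


if __name__ == "__main__":
    # Hypothetical stand-ins for Timer, timeGetTime, GetTickCount, etc.
    clocks = {"perf_counter": time.perf_counter, "monotonic": time.monotonic}
    for name, stats in run_tests(clocks, sqrt_loop).items():
        print(name, {k: round(v, 4) for k, v in stats.items()})
```

Shuffling inside the outer loop means no method always runs first or last, so any warm-up or background effect is spread across all of them.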

As all the methods are based on the system clock, I expected the results to be similar in each case.

However, it seemed reasonable that certain functions would be more efficient to process.

For these tests, the main requirement is certainly not to determine which method gives the smallest time.

Here the aim is consistency: repeated tests should give a small standard deviation.

These are the summary results from one PC:

The full results are in the attached PDF.

This is a summary of my conclusions from the PDF:

Overall, I would suggest that both Timer and timeGetTime are reliable. Each had minimal variation compared to the other methods.

Bearing in mind that the Timer function is based on the time elapsed since midnight whereas timeGetTime runs for 49 days before resetting, timeGetTime should definitely be used if the timing tests are likely to cross midnight or last longer than 24 hours.

However, for smaller time intervals on a reasonably powerful PC, I don’t think there is much advantage in one method compared to the other.

The Stopwatch class works well but requires additional code compared to the Timer or timeGetTime methods.

GetTickCount is satisfactory but perhaps not as reliable as the other methods.

GetSystemTime has greater variation and occasionally gives spurious results - overall it is unreliable and should not be used.
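On the rollover point in the conclusions above (Timer resetting at midnight, timeGetTime after about 49 days), the sketch below shows the arithmetic involved. It is Python rather than VBA and is only an illustration: the function names are hypothetical, the tick count is treated as an unsigned 32-bit millisecond value, and each function can only correct for a single rollover.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # a Timer-style value resets at midnight
TICK_MODULUS = 2 ** 32          # size of a 32-bit millisecond counter


def elapsed_since_midnight(start: float, end: float) -> float:
    """Elapsed seconds between two seconds-since-midnight readings.

    Corrects for one midnight crossing only; an interval of 24 hours
    or more cannot be recovered from these two values alone.
    """
    diff = end - start
    if diff < 0:
        diff += SECONDS_PER_DAY
    return diff


def elapsed_ticks_ms(start: int, end: int) -> int:
    """Elapsed milliseconds between two 32-bit tick counts, allowing one wrap."""
    return (end - start) % TICK_MODULUS


if __name__ == "__main__":
    # Days before a 32-bit millisecond counter wraps (a little under 50).
    print(TICK_MODULUS / 1000 / SECONDS_PER_DAY)
    print(elapsed_since_midnight(86399.5, 0.75))
    print(elapsed_ticks_ms(TICK_MODULUS - 100, 50))
```

The midnight correction is easy to add, but it silently gives the wrong answer for anything lasting a full day or more, which is why a longer-running counter is the safer choice for long tests.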

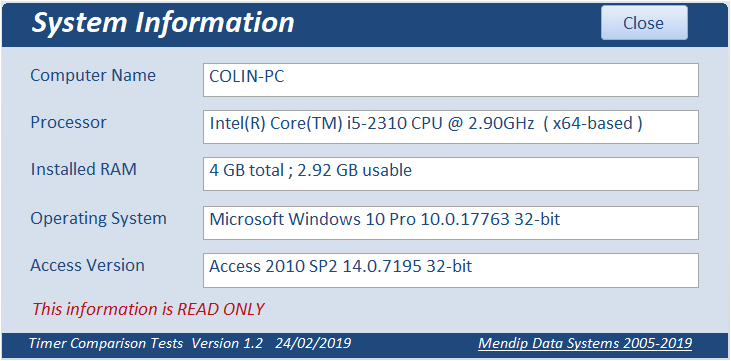

NOTE: The utility also includes code to obtain system info - mainly using WMI - this is useful for benchmarking

I hope this is useful to others .... if only so you don't need to run the tests yourself !!!

For reference, these are the comments ADezii passed on from the Access 2000 Developers Handbook pg 1135-1136:

If you're interested in measuring elapsed times in your Access Application, you're much better off using the timeGetTime() API Function instead of the Timer() VBA Function. There are 4 major reasons for this decision:

1. timeGetTime() is more accurate. The Timer() Function measure time in 'seconds' since Midnight in a single-precision floating-point value, and is not terribly accurate. timeGetTime() returns the number of 'milliseconds' that have elapsed since Windows has started and is very accurate.

2. timeGetTime() runs longer without 'rolling over'. Timer() rolls over every 24 hours. timeGetTime() keeps on ticking for up to 49 days before it resets the returned tick count to 0.

3. Calling timeGetTime() is significantly faster than calling Timer().

4. Calling timeGetTime() is no more complex than calling Timer(), once you've included the proper API declaration
