You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

Left Join vs Inner Join

- Thread starter Guus2005

- Start date

Interesting question after 15 years of working with Access.

I think in both cases the keys of the two tables are compared with each other using CROSS JOIN. With the LEFT JOIN, all keys of the left table are taken over in differentiation, with the INNER JOIN only the matching ones. That makes practically no significant difference.

That depends on the data. If only the same keys occur in both tables, the result is identical.same result

Why?Inner joins are faster

I think in both cases the keys of the two tables are compared with each other using CROSS JOIN. With the LEFT JOIN, all keys of the left table are taken over in differentiation, with the INNER JOIN only the matching ones. That makes practically no significant difference.

- Local time

- Today, 00:54

- Joined

- Oct 29, 2018

- Messages

- 22,824

I was about to say the same thing. Perhaps use Jet Show Plan?I can feel a Colin speedtest coming on

For a realistic speed test, the query used should only show the keys in the SELECT part.

If additional fields are used, different content is reloaded due to the different functionality, so with the LEFT JOIN there may be some more. Then you can expect differences.

If additional fields are used, different content is reloaded due to the different functionality, so with the LEFT JOIN there may be some more. Then you can expect differences.

A measurement should make sense. You should define for yourself whether you mean a comparison between OUTER JOIN and INNER JOIN or a comparison of queries that contain an OUTER JOIN or an INNER JOIN. I was orientated towards the first case, and I have to model it a bit to compare the same. If thick memo fields or other masses of data are loaded on one side, there are already explainable differences.

Of course, you could also model it in such a way that you use two tables in a 1:n relationship with referential integrity set, where there are foreign keys in the secondary table for every primary key in the primary table, so that the result and thus the loading of data match.

The already mentioned different functionality of the JOIN variants in various different practical tasks will lead to differences, but where measurements are then value-free.

Of course, you could also model it in such a way that you use two tables in a 1:n relationship with referential integrity set, where there are foreign keys in the secondary table for every primary key in the primary table, so that the result and thus the loading of data match.

The already mentioned different functionality of the JOIN variants in various different practical tasks will lead to differences, but where measurements are then value-free.

isladogs

Access MVP / VIP

- Local time

- Today, 08:54

- Joined

- Jan 14, 2017

- Messages

- 19,505

No need as already done long ago!I can feel a Colin speedtest coming on

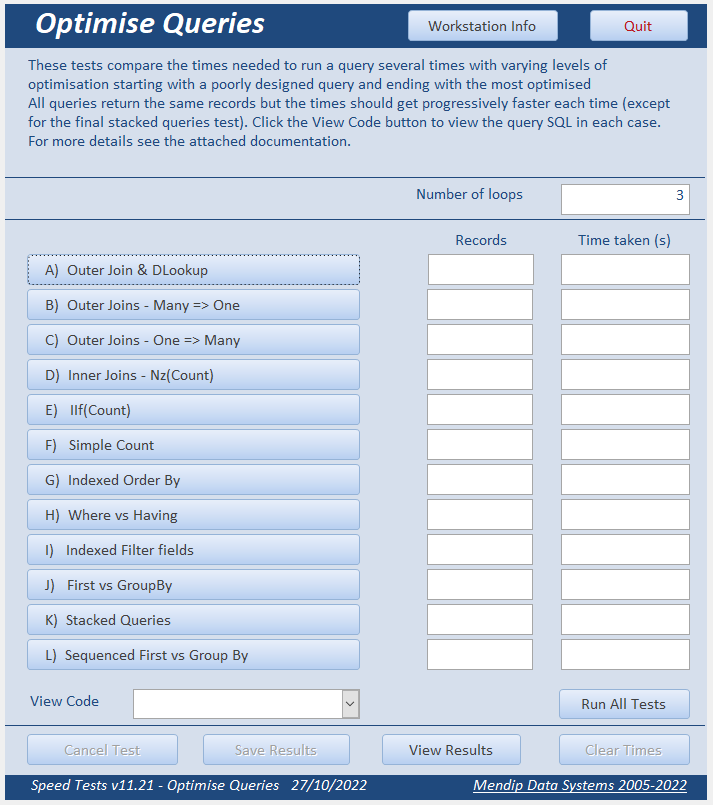

This formed a small part of the series of tests in my Optimising Queries article:

Speed Comparison Tests 8

This article covers various methods of optimising queries and the use of the JET ShowPlan feature. It is the eighth in a series of articles discussing various tests done to compare the efficiency of different approaches to coding. Example databases are provided so the tests can be done on your...

JET ShowPlan outputs provided in each case

It is indeed true that running inner joins is significantly faster than outer joins (where the output data is identical in each case)

Speed Comparison Tests by isladogs

Measurements are convincing in the result, even if the queries do a lot more than just performing JOINs. Since the execution plans are also different, I am converted in my opinion. Thanks to isladogs for the documents provided.The intermediate result after the JOINs before filtering and grouping is different, but that's not enough to explain the rather large difference.where the output data is identical in each case

Optimise Queries

The following variant could be included in a test:

SQL:

SELECT

D.PupilID,

FIRST(D.Surname) AS LastName,

FIRST(D.Forename) AS FirstName,

FIRST(D.YearGroup) AS YearGp,

R.Code,

FIRST(C.Description) AS CodeType,

FIRST(R.MeritPts) AS Merits,

FIRST(R.DeMeritPts) AS Demerits,

COUNT(*) AS Incidents

FROM

(

SELECT

PupilID,

Surname,

Forename,

YearGroup

FROM

PupilData

WHERE

DateOfBirth BETWEEN #1/1/2005# AND #12/31/2005#

) AS D

INNER JOIN

(PRCodes AS C

INNER JOIN

(

SELECT

PupilID,

Code,

MeritPts,

DeMeritPts

FROM

PRecords

WHERE

DateOfIncident BETWEEN #1/1/2018# AND #12/30/2018#

) AS R

ON C.Code = R.Code

)

ON D.PupilID = R.PupilID

GROUP BY

D.PupilID,

R.Code

ORDER BY

FIRST(D.Surname),

FIRST(D.Forename)isladogs

Access MVP / VIP

- Local time

- Today, 08:54

- Joined

- Jan 14, 2017

- Messages

- 19,505

@ebs17

Thanks for the suggestion which I've added at the end of the list of tests.

It only took a few minutes to edit the example app

As its obviously a variation on Test J, I've called it Sequenced First vs Group By

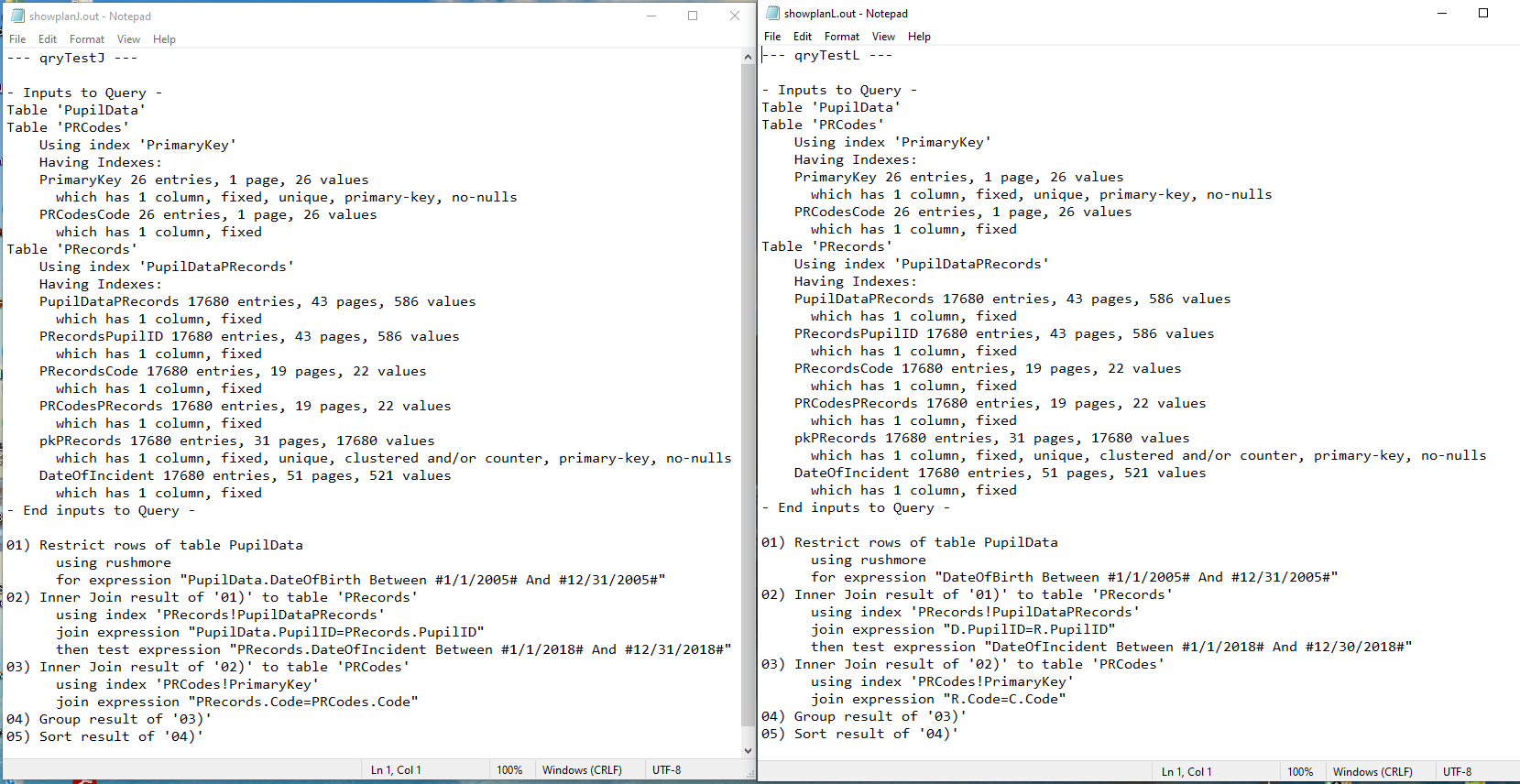

I've run some tests and also checked the JET ShowPlan output for it compared to Test J.

Your final sentence gives an indication of what you think will happen ...

However, before I tell you the results, I'd be interested in your views on how it will compare in terms of speed with test J.

Similarly, any thoughts you may have on how the two execution plans differ

Thanks for the suggestion which I've added at the end of the list of tests.

It only took a few minutes to edit the example app

As its obviously a variation on Test J, I've called it Sequenced First vs Group By

I've run some tests and also checked the JET ShowPlan output for it compared to Test J.

Your final sentence gives an indication of what you think will happen ...

However, before I tell you the results, I'd be interested in your views on how it will compare in terms of speed with test J.

Similarly, any thoughts you may have on how the two execution plans differ

I expect the same to better speed. Why: A JOIN is more complex than a filter on a field. Prof. Dr. Felix Naumann (Hasso Plattner Institute, University of Potsdam) put the difference at a flat rate of 3:1. Indices would either have to be used equally for both operations or not.

So you would do the lighter operation of filtering first to get smaller tables, which then does the more involved operation of JOIN over fewer records.

I hope I can give the SQL optimizer a good template that he understands and can implement profitably.

So you would do the lighter operation of filtering first to get smaller tables, which then does the more involved operation of JOIN over fewer records.

I hope I can give the SQL optimizer a good template that he understands and can implement profitably.

isladogs

Access MVP / VIP

- Local time

- Today, 08:54

- Joined

- Jan 14, 2017

- Messages

- 19,505

Thanks for the speedy reply which was what I thought you were expecting.

I don;t know anything about the person you quoted so can only go on my own experience & results.

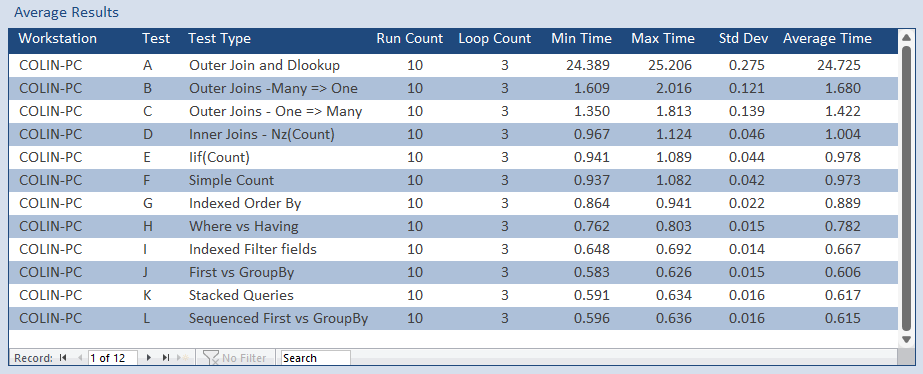

Inevitably the results are similar as its basically the same query

In fact, the query execution plans are identical - remember that Access will always attempt to optimise the query execution whether written as in the original test (J) or your variant (L)

.

.

So you might expect the speed test results would be identical.

WRONG!

I ran all tests again 10 times ...

The new version is consistently slightly slower by about 0.01s (approx 1.5%)

A small but measurable difference. So why is that?

I have a theory which I'm going to test but in the meantime . . . why do you think it might be slower?

I am attaching the database & the 2 show plan files so you can test for yourself

I don;t know anything about the person you quoted so can only go on my own experience & results.

Inevitably the results are similar as its basically the same query

In fact, the query execution plans are identical - remember that Access will always attempt to optimise the query execution whether written as in the original test (J) or your variant (L)

So you might expect the speed test results would be identical.

WRONG!

I ran all tests again 10 times ...

The new version is consistently slightly slower by about 0.01s (approx 1.5%)

A small but measurable difference. So why is that?

I have a theory which I'm going to test but in the meantime . . . why do you think it might be slower?

I am attaching the database & the 2 show plan files so you can test for yourself

Attachments

Guus2005

AWF VIP

- Local time

- Today, 09:54

- Joined

- Jun 26, 2007

- Messages

- 2,636

Hi Pat, never seen a hostile response from you. But here we are.

I didn't create the query and yes i know that you should focus on what you want to achieve. I was suprised when the outcome for both joins was the same and i needed an extra arguments to convince the person who wrote the offending query to change the join. I even added a picture with venn-diagrams showing the result of a particular join.

The problem was fixed. Thanks for your input

I didn't create the query and yes i know that you should focus on what you want to achieve. I was suprised when the outcome for both joins was the same and i needed an extra arguments to convince the person who wrote the offending query to change the join. I even added a picture with venn-diagrams showing the result of a particular join.

The problem was fixed. Thanks for your input

This is a special case with a certain data situation. But you shouldn't rely on this. If additional records are added to a table, the output can change very quickly. At some point does the person want to immediately change the query design because of new data?outcome for both joins was the same

I hope you don't mind if we have hijacked your topic here for a more in-depth discussion.

I have no real idea. JOIN and WHERE are comparative operations and are equivalent in the order in which the query is processed. This gives the SQL optimizer a choice of where to start. This is influenced by the subqueries I have shown, because now the queries in the FROM must first be evaluated in order. The show plan is visibly the same here, but maybe a lot more will happen internally.why do you think it might be slower?

The variants and optimizations relate to a specific task (existing tables, data contained, request for output). Even small changes to one of these points can necessitate very large changes, so the best solution found can only be determined by specific circumstances.

If, for example, the year of birth was not filtered, you could filter directly in PRecords for the year and immediately group using the two foreign keys and count the data records. This would have reduced the number of records from 17680 to 1717, and only these would need to be joined to the two primary tables.

The principle remains, therefore, to reduce data volumes quickly and at an early stage, if possible with lean operations. Best possible index utilization should be self-evident.

I see other construction sites that may be more productive. The index planning in your tables is not yet optimal. Always check the index window!

PupilData: Why is there an index on Forename? I would find a composite index on Surname and Forename useful, e.g. for sorting.

PRCodes: Index on Code is duplicate. The second index is of no use, but it also has to be managed and (theoretically) slows down write operations.

PRecords: Index on Code and PupilID are superfluous because they are duplicated. When you create a 1:n relationship with referential integrity set, the foreign key is automatically indexed because that's so important. However, this index is not superficially visible. However, you can see it via code-controlled reading in the table definition. Again, a composite index on PupilID and Code would make sense, in that order, because PupilID has the greater variability in values.

This index could be used in the grouping in the query using the two foreign key fields from PRecords:

SQL:

GROUP BY R.PupilID, R.CodeThe order of the JOIN groups in the FROM part could also play a role. A colleague said: We know that the jet optimizer is not the smartest. There might be some help there. This immediately gives rise to the idea that the show plan of the same query with the same tables could look different in a different database management system.

Last edited:

isladogs

Access MVP / VIP

- Local time

- Today, 08:54

- Joined

- Jan 14, 2017

- Messages

- 19,505

There are some interesting points in your lengthy reply.

The tables are cut down versions taken from the demo version of one of my commercial apps for schools which has over 300 tables and well over 1000 queries (saved or SQL statements in VBA).

The indexes therefore reflect wider usage rather than for this example app alone.

However, you are correct that there are some duplicate indexes that I forgot to remove

In general, subqueries are slower and should be avoided if another better method exists.

It may be that any additional optimisation caused by your 'sequencing' is negated by the presence of subqueries.

I wonder also whether aliasing has any impact. If so, likely to be small.

You used Count(*) whereas I used Count(FieldName) which is probably slower.

I did try to test the effect of aliasing but to do so would have meant changing your query, so I left it for now.

The actual query is close to being fully optimised, so it probably isn't worth spending any more time on that.

However, a forthcoming speed test will compare subqueries, non-equi joins and more besides...possibly aliasing as well

If interested, you can find the complete list of published speed tests at

www.isladogs.co.uk

www.isladogs.co.uk

BTW Although the demo has an Access BE, the actual app for schools uses a SQL Server BE and has been optimised for that.

The tables are cut down versions taken from the demo version of one of my commercial apps for schools which has over 300 tables and well over 1000 queries (saved or SQL statements in VBA).

The indexes therefore reflect wider usage rather than for this example app alone.

However, you are correct that there are some duplicate indexes that I forgot to remove

In general, subqueries are slower and should be avoided if another better method exists.

It may be that any additional optimisation caused by your 'sequencing' is negated by the presence of subqueries.

I wonder also whether aliasing has any impact. If so, likely to be small.

You used Count(*) whereas I used Count(FieldName) which is probably slower.

I did try to test the effect of aliasing but to do so would have meant changing your query, so I left it for now.

The actual query is close to being fully optimised, so it probably isn't worth spending any more time on that.

However, a forthcoming speed test will compare subqueries, non-equi joins and more besides...possibly aliasing as well

If interested, you can find the complete list of published speed tests at

Speed Comparison Tests

This is a series of articles discussing various tests done to compare the efficiency of different approaches to coding. Example databases are provided so the tests can be done on your own workstations

BTW Although the demo has an Access BE, the actual app for schools uses a SQL Server BE and has been optimised for that.

Can you say that in general?subqueries are slower

I think you have to differentiate whether the subquery is in the FROM, SELECT or WHERE part. In the SELECT and WHERE part you often have correlated subqueries. These are not only executed once, but in the extremum per data record of the main query, which multiplies an effort. In this case, you don't need to be surprised about longer runtimes.

I see a subquery in the FROM part as uncritical, it is only executed once. You can also cascade multiple times. It would be important that there are no superfluous operations such as sorting.

isladogs

Access MVP / VIP

- Local time

- Today, 08:54

- Joined

- Jan 14, 2017

- Messages

- 19,505

One very important point I completely forgot to mention when I posted the query execution plans in post #16.

JET ShowPlan doesn't handle subqueries at all. So it only gave a partial picture of what was happening with the subquery version.

I agree with Pat's comments in the last post. Subqueries are inefficient in terms of query execution.

See the comments on performance and conclusions by Allen Browne in his article http://allenbrowne.com/subquery-02.html.

However, subqueries can also be very useful

I use them when there isn't another good method of achieving the same results.

JET ShowPlan doesn't handle subqueries at all. So it only gave a partial picture of what was happening with the subquery version.

I agree with Pat's comments in the last post. Subqueries are inefficient in terms of query execution.

See the comments on performance and conclusions by Allen Browne in his article http://allenbrowne.com/subquery-02.html.

However, subqueries can also be very useful

I use them when there isn't another good method of achieving the same results.

Similar threads

- Replies

- 18

- Views

- 1,437

- Replies

- 8

- Views

- 1,364

- Replies

- 17

- Views

- 1,790

Users who are viewing this thread

Total: 1 (members: 0, guests: 1)