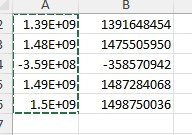

First question: If a CSV is required for the import into the SAP system, and a CSV is created by the export from the Access application, where and why does Excel come into play?

Just to clarify the scope of all this (maybe there is another solution): I am required to prepare a CSV template file because the SAP functional team asks for it; they upload the data into the system through a program that reads the CSV file. So, as I explained, I export my data from my MS Access database into a CSV file. The team that receives my template (the CSV file) simply takes it and imports it into their application, but when they try to import it they get a duplication error due to the "wrong" transformation of those long numbers.

Second question: If the CSV is opened in Excel out of curiosity or playfulness, the CSV itself does not change unless explicit steps are taken to save it back. Do the actors involved not know what they are doing? Remove blindfold, tie hands?

An XLSX is not a CSV - do we need to discuss this further?

Third question: If a CSV is requested for an import, why can't the CSV be handled there? Do “experts” have to be replaced?

If you write text to a text file and then import text into a text field, there can be no reformatting unless interference is explicitly caused.
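To illustrate the point, here is a minimal Python sketch (not the Access export or the SAP import program itself, neither of which is shown in this thread). The long identifiers are made up for the example. It writes them to CSV as text, reads them back as text, and then shows what happens if some step in between explicitly converts them to floating-point numbers, which is one plausible way two distinct long numbers could end up looking like duplicates.

```python
import csv
import io

# Hypothetical long identifiers, invented for illustration only.
rows = [
    ["id", "name"],
    ["12345678901234567890", "A"],
    ["12345678901234567891", "B"],
]

# Write the values as plain text into an in-memory CSV "file".
buffer = io.StringIO()
csv.writer(buffer).writerows(rows)

# Read the CSV back; csv.reader returns every field as a string.
buffer.seek(0)
read_back = list(csv.reader(buffer))

# Text in, text out: nothing was reformatted.
unchanged = read_back == rows

# Explicit interference: converting the text to a float drops the
# trailing digits, so two different IDs become the same number.
as_float = [float(row[0]) for row in rows[1:]]
collapsed = as_float[0] == as_float[1]

print("round trip unchanged:", unchanged)
print("distinct IDs collapse after float conversion:", collapsed)
```

If the values stay text from export to import, the duplicates cannot come from the CSV itself; they would have to come from a step that treats the column as a number.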